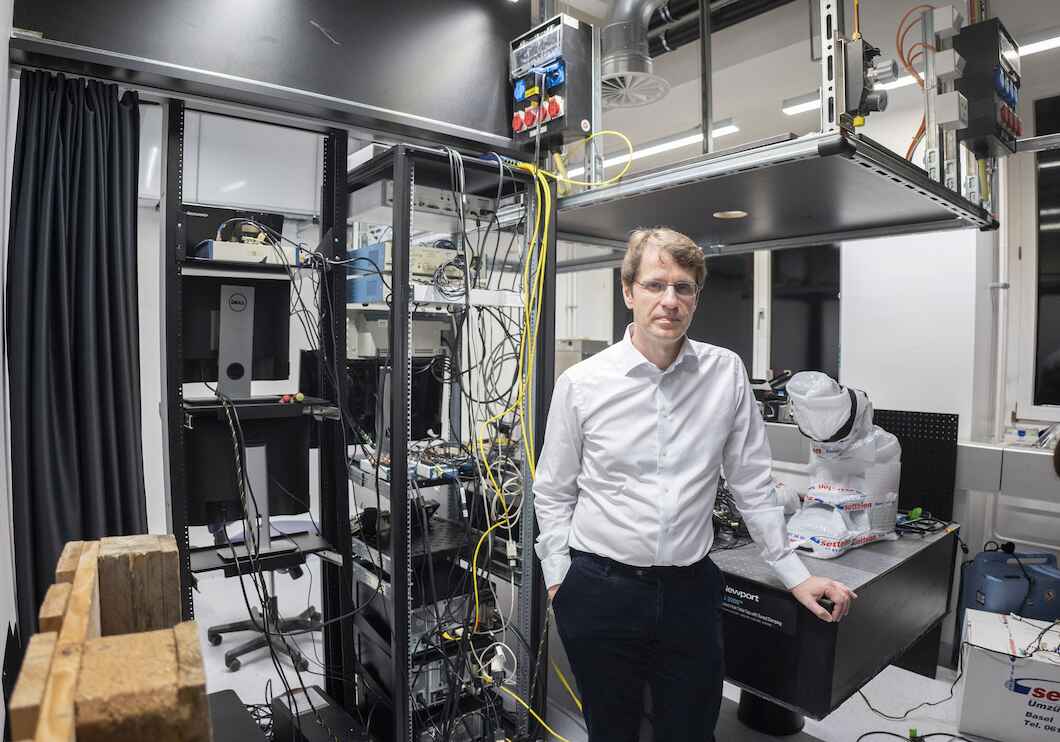

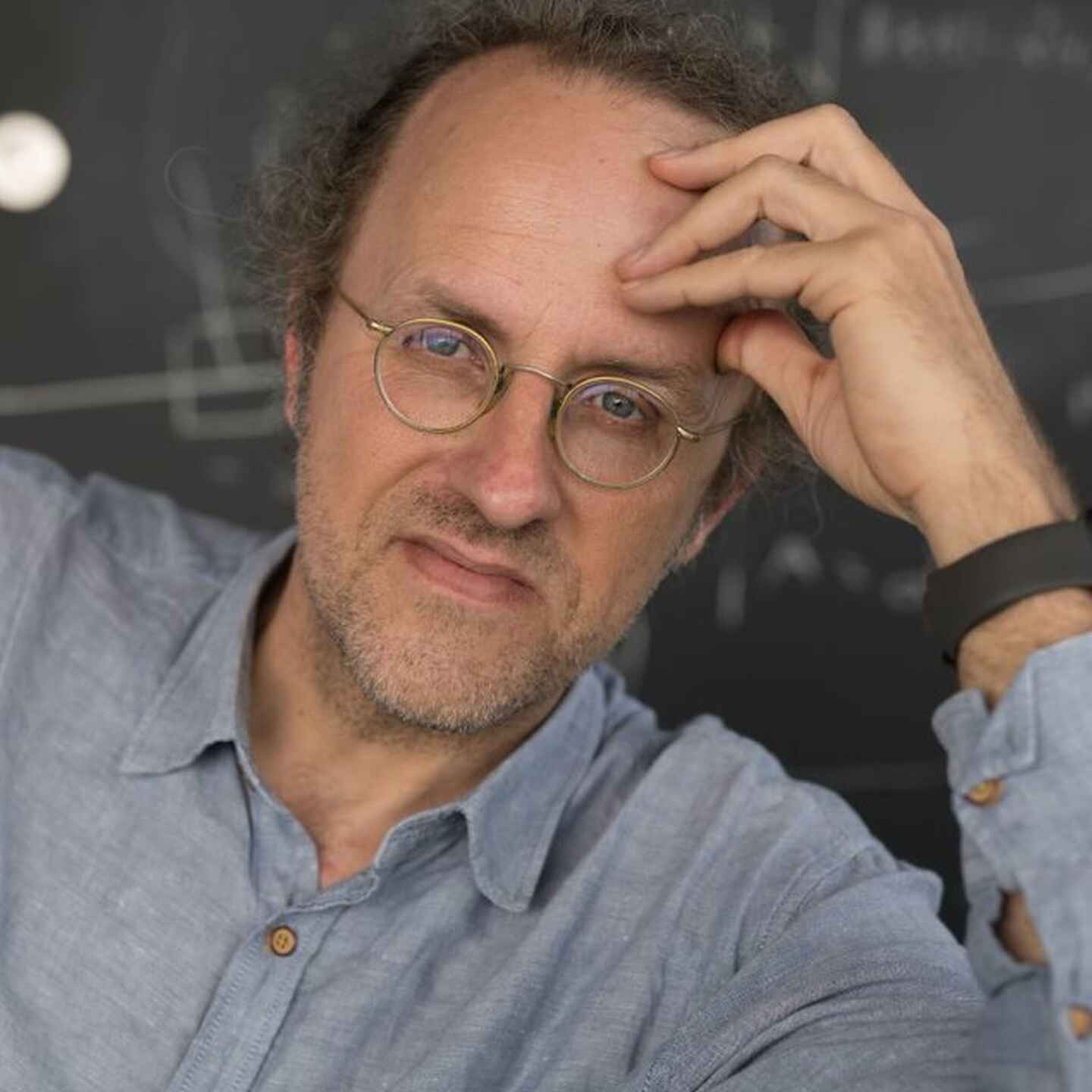

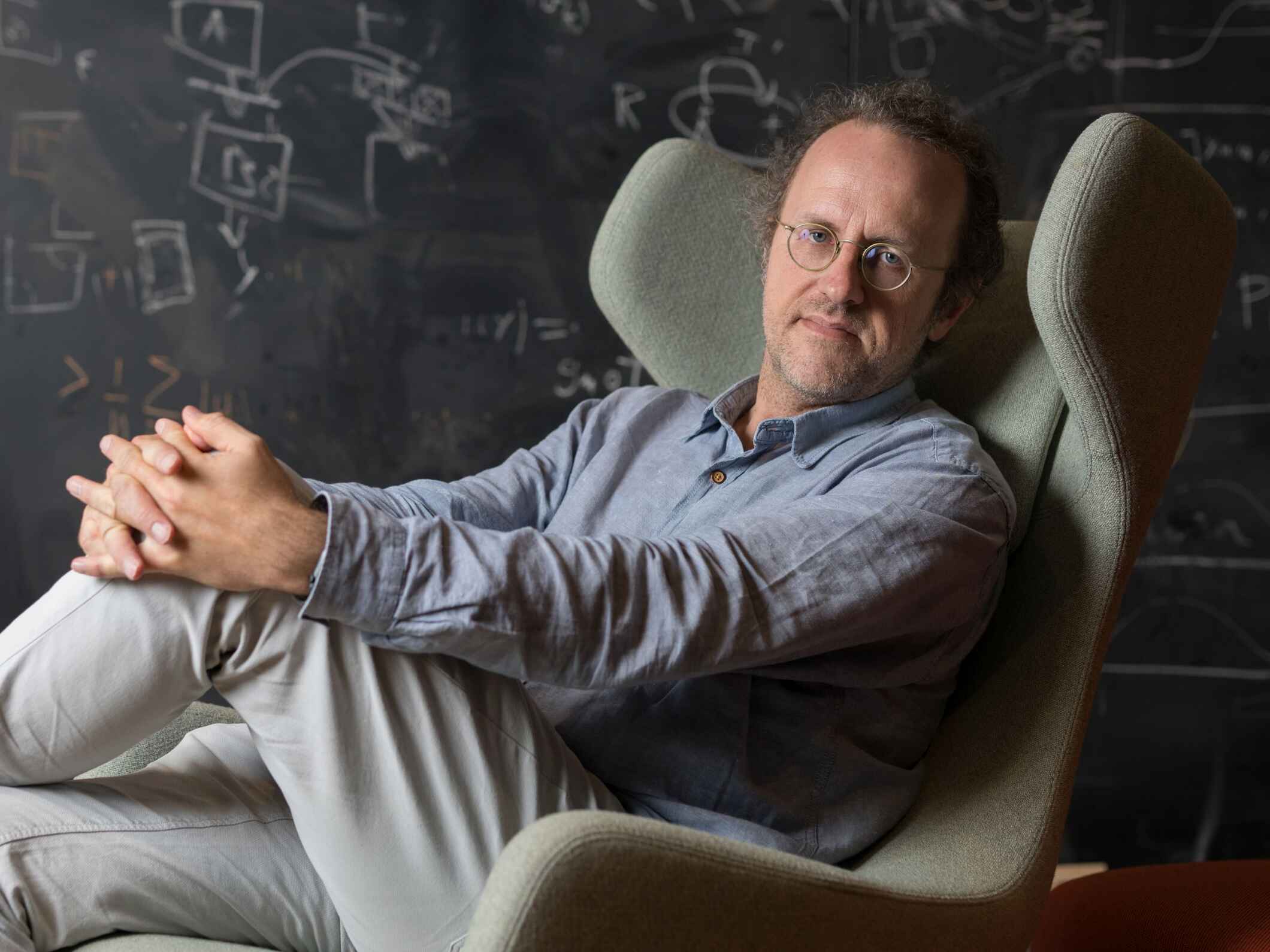

Bernhard Schölkopf (2019):

The Computing Tricks of Artificial Intelligence

Bernhard Schölkopf developed mathematical methods that have contributed significantly to the recent surge in artificial intelligence (AI). The German physicist, mathematician, and computer scientist achieved worldwide renown with support vector machines and kernel methods. These are not machines in the classical sense but sophisticated algorithms (program instructions) with which computers can perform highly complicated AI calculations quickly and precisely.


Although almost everyone comes into contact with it daily, about half of all Germans do not know what the term artificial intelligence means. “AI is at play when the smartphone automatically groups the stored photos according to faces and topics such as vacation or translates texts from one language into another”, Schölkopf explains. Digital voice assistants such as Alexa, Siri, and Cortana that respond intelligently to spoken language also use AI algorithms. AI is currently experiencing a worldwide boom, not least because of its rapidly growing economic significance. The USA and China are investing billions in this technology, which will fundamentally change working life. Intelligent robots moved into factories, such as those of the automobile industry, on a large scale even before the turn of the century. In the future, intelligent systems will increasingly perform routine tasks in offices.


Bernhard Schölkopf is a pioneer in this “third industrial revolution”, as he refers to it. “The first was based on energy stemming from water power and steam, and the second on energy from electrification. The third and present one replaces energy with the concept of information.”

Expert systems provided the foundation for the discipline of computer science

There are many different approaches to intelligent computer systems. As early as the 1950s, US scientists were conducting research on AI. They simulated the thought processes of human experts in what were called expert systems, which were fed if–then rules. From the stored rules “If it rains, the street is slick” and “If the street is slick, it is easy for cars to skid”, the expert systems were able to derive independently “If it rains, it is easy for cars to skid”. This was, of course, a purely formal, logical act of computerized data processing. The systems did not have any idea what a street or a car is. Nonetheless, the development was accompanied by an enormous amount of hype. The AI legend Marvin Minsky of the Massachusetts Institute of Technology asserted at the time: “We humans will be lucky if intelligent robots still keep us as their pets in 50 years”. Little was left of all this half a century later. Even so, AI researchers did develop some of the first high-level programming languages for computers (e.g., Lisp), which are easier for programmers to understand than pure machine code consisting of zeroes and ones, thus laying the foundation for the field of computer science.
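The rule chaining described above can be illustrated with a few lines of Python. This is only a minimal sketch of the idea, not a reconstruction of any historical expert system; the function name `derive_rules` and the representation of rules as text pairs are assumptions made for illustration.

```python
# Each rule is a (premise, conclusion) pair, read as "if premise, then conclusion".
RULES = [
    ("it rains", "the street is slick"),
    ("the street is slick", "it is easy for cars to skid"),
]


def derive_rules(rules):
    """Chain rules until nothing new appears: from A->B and B->C, add A->C."""
    known = set(rules)
    changed = True
    while changed:
        changed = False
        for a, b in list(known):
            for c, d in list(known):
                if b == c and a != d and (a, d) not in known:
                    known.add((a, d))
                    changed = True
    # Return only the rules that were not stored to begin with.
    return known - set(rules)


derived = derive_rules(RULES)
for premise, conclusion in sorted(derived):
    print(f"If {premise}, then {conclusion}.")
```

The program only matches strings against each other. Like the systems described in the text, it has no notion of what rain, a street, or a car is.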

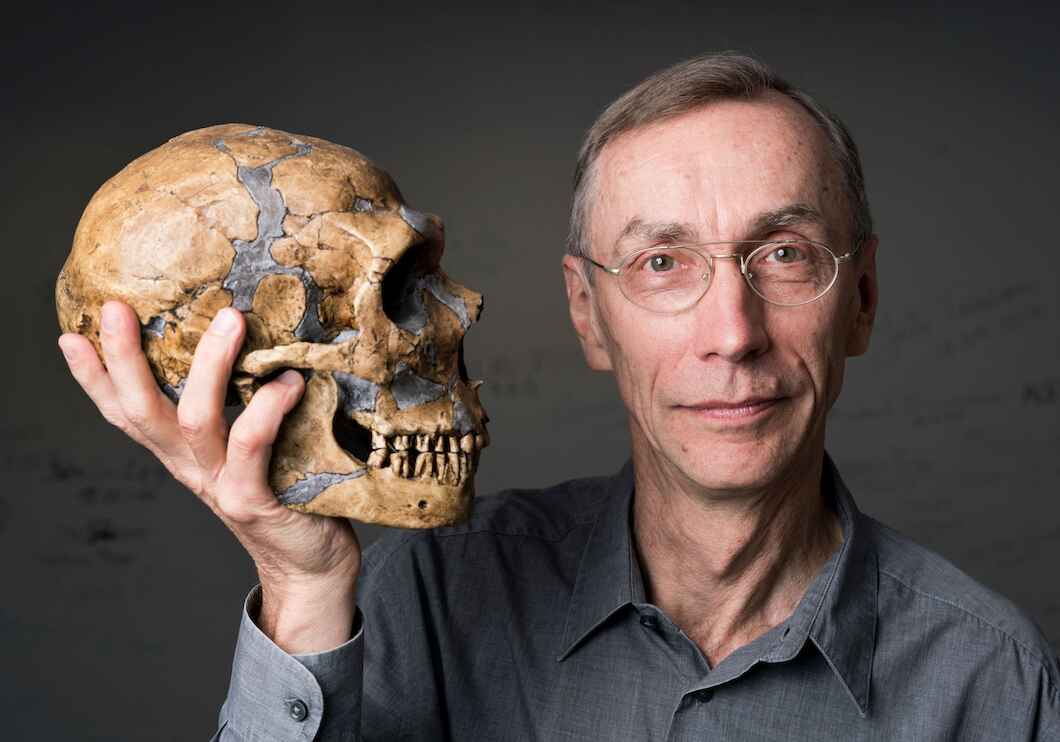

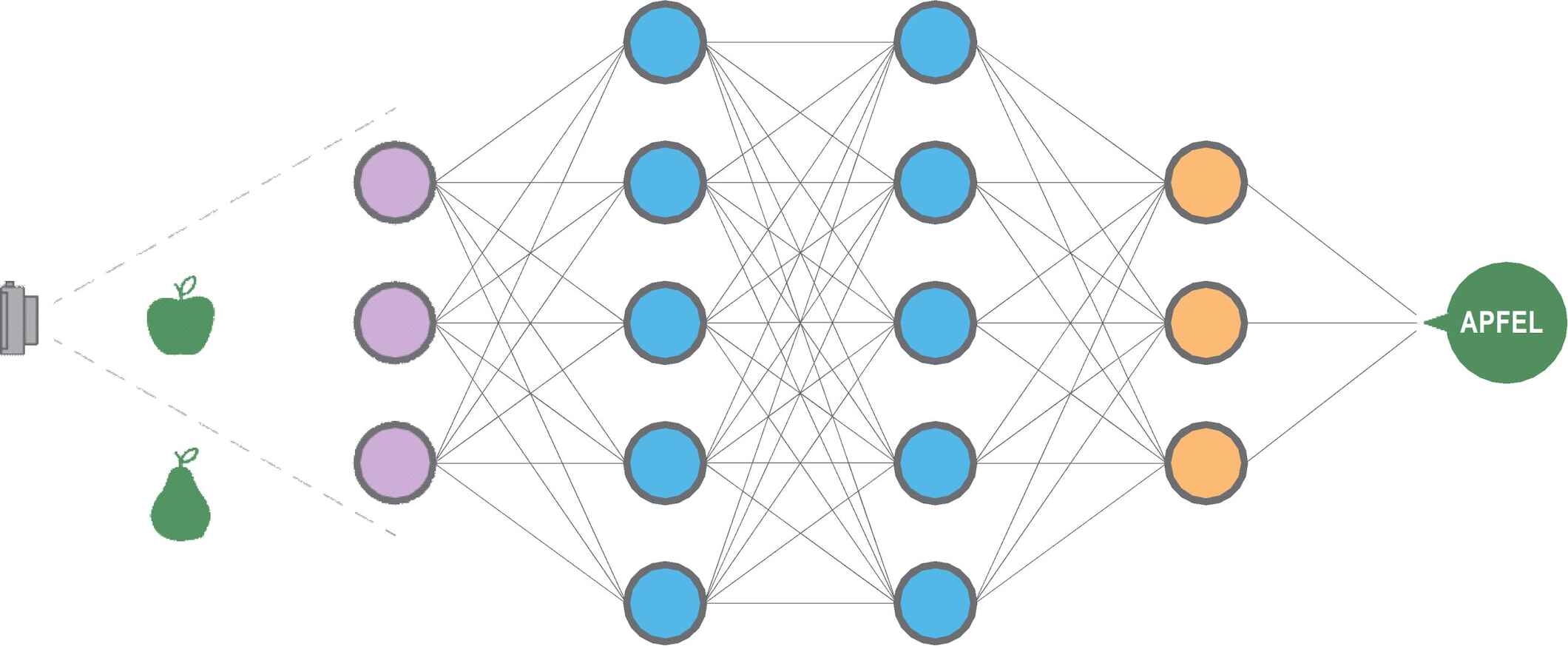

Far more successful since the mid-1980s have been the so-called artificial neural networks, whose structure is loosely modeled on the human brain. They have artificial neurons that are arranged in several layers. They are not fed expert rules but learn to solve tasks more like a child does: A data scientist trains a neural network step by step by showing it, for example, pictures of pears and apples. Initially, the network guesses which of the two types of fruit is shown. The data scientist checks the results and tells the system whether it was right or wrong. The neural network gets better and better in the course of the training. The network stores the “knowledge” that it acquires in its artificial neurons, which are linked to one another, like synapses in the brain, via excitatory or inhibitory weights. After many thousands of rounds of training, the weights are adjusted so that the neural network can now distinguish apples from pears on new images that it has never seen before.
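The training loop of guessing, checking, and adjusting weights can be sketched with a single artificial neuron (a perceptron), which is a strong simplification of the multi-layer networks described above. The two features per fruit, the sample values, and the learning rate are all invented for this illustration.

```python
# Made-up training data: (height/width ratio, redness from 0 to 1) -> label.
# +1 stands for "apple", -1 for "pear".
TRAINING = [
    ((1.0, 0.9), 1),
    ((0.9, 0.7), 1),
    ((1.1, 0.8), 1),
    ((1.5, 0.2), -1),
    ((1.6, 0.3), -1),
    ((1.4, 0.1), -1),
]


def predict(weights, bias, features):
    """The neuron's guess: the sign of the weighted sum of its inputs."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total >= 0 else -1


def train(samples, rounds=100, rate=0.1):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(rounds):
        mistakes = 0
        for features, label in samples:
            if predict(weights, bias, features) != label:
                # Wrong guess: nudge the weights toward the correct answer.
                weights = [w + rate * label * x for w, x in zip(weights, features)]
                bias += rate * label
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


weights, bias = train(TRAINING)
print(predict(weights, bias, (1.05, 0.85)))  # a new, apple-like fruit
print(predict(weights, bias, (1.55, 0.25)))  # a new, pear-like fruit
```

Everything the neuron has learned is contained in the two weights and the bias. A real image classifier works on pixels rather than on two hand-picked features and has many more weights, but the principle of correcting after each wrong guess is the same.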

In 1997 an AI system defeated the reigning chess world champion

The most recent breakthrough in AI goes back to those methods of machine learning whose performance has greatly improved thanks to ever faster computers, ever larger memory, and rapidly growing amounts of data for training. Today neural networks control, for example, driverless cars that they autonomously steer with the aid of data from cameras and sensors and automatically brake upon recognizing obstacles.

In many games AI systems have become far superior to their human competitors. As early as 1997, Deep Blue, a chess computer built by IBM that drew on a giant knowledge base of championship games and was able to evaluate about 200 million positions per second, defeated the then world chess champion Garry Kasparov under tournament conditions. The world champion in the complex Japanese board game Go was defeated by a system named AlphaGo that was developed by the Google firm DeepMind and that was based on a neural network trained on championship matches.

Support vector machines provide particularly precise results

The support vector machines that Schölkopf helped to develop work similarly to neural networks but provide more precise results for some tasks. Furthermore, they are based on a solid mathematical foundation, which makes their functioning more transparent.

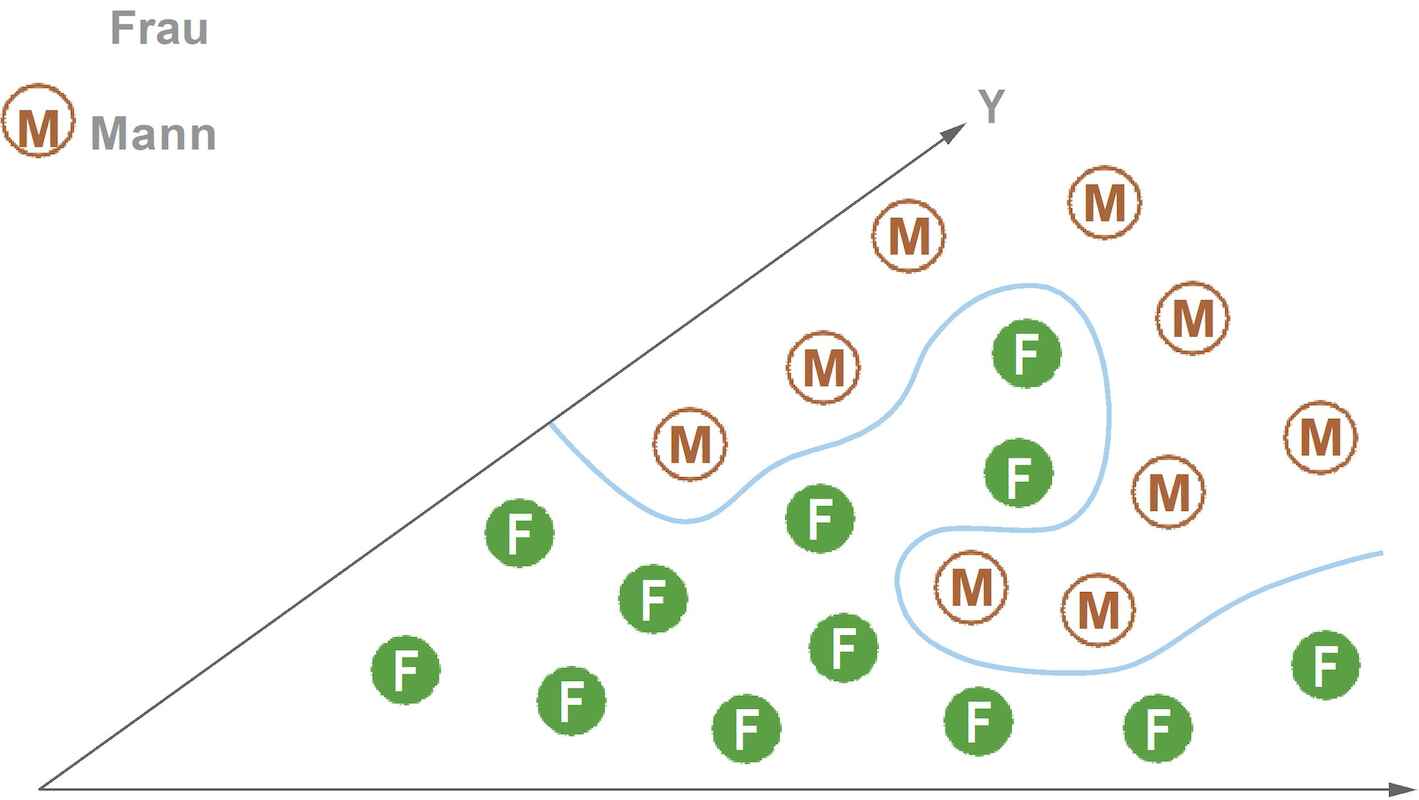

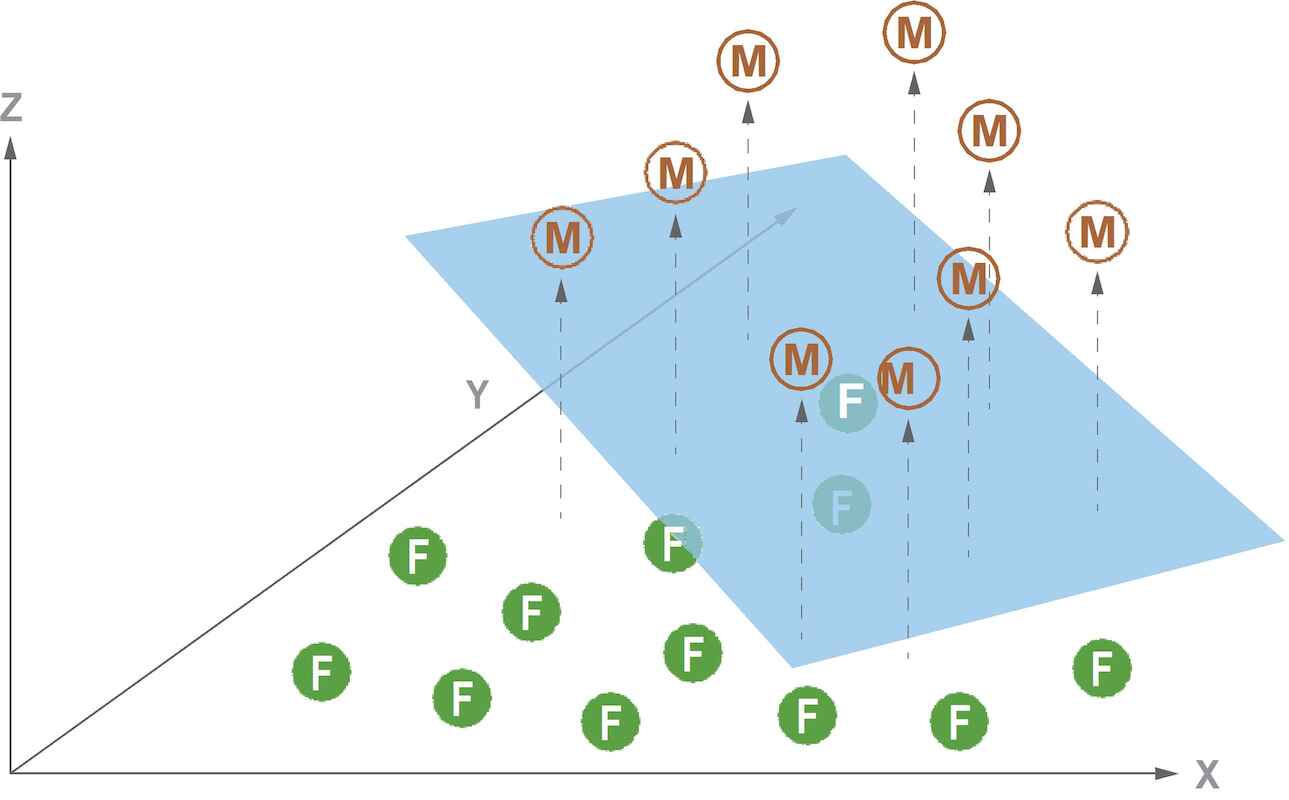

“A simple task for a support vector machine would be, for example, to determine on the basis of entries for height and weight whether a person is a man or a woman”, explains Matthias Bauer, a doctoral candidate in Schölkopf’s MPI team in Tübingen. The system represents the entries mathematically as vectors, which you can imagine as two clouds of dots (one for women, one for men) in a two-dimensional coordinate system. Ideally, the two clouds can be separated by a straight line. A straight line is referred to as a linear solution, which can be calculated particularly quickly. Yet since there are also some short, light men and tall, heavy women, a few of the points land in the wrong cloud.

To be able to separate the clouds anyway, a nonlinear wavy line would have to be used, which however is much more complicated to calculate.

The remedy employed by the programmer to separate them is to go into the third dimension, as it were. With the aid of a mathematical function (a kernel function, which can be chosen freely) the programmer raises or lowers the points in the two-dimensional plane so that they now float at different heights in three-dimensional space. “As a control value for the height, one can for example take one third of the weight plus four times the person’s height”, Bauer explains. A skillful choice of this kernel function subsequently makes it possible to use a plane to separate the points floating in three-dimensional space. With regard to three-dimensional space, the plane represents a linear solution. This procedure can be extended at will. The points (vectors) can also be transformed into a higher-dimensional space (or even an infinite-dimensional one). The linear “separating hyperplane” then always has one dimension fewer than the space in which it lies.
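The effect of lifting points into the third dimension can be tried out on a toy data set. The sketch below is an illustration under stated assumptions: the points are invented, the height is taken to be the squared distance from the origin (not the weight-and-height formula quoted above), and the separating plane is set by hand, whereas a support vector machine would compute it.

```python
# Made-up 2D points: class -1 sits near the origin, class +1 surrounds it,
# so no straight line in the plane separates the two classes.
INNER = [(0.5, 0.0), (0.0, -0.5), (-0.3, 0.4), (0.2, 0.2)]
OUTER = [(2.0, 0.0), (0.0, 2.0), (-1.5, -1.5), (1.4, -1.6)]


def lift(point):
    """Assign each 2D point a height; here, its squared distance from the origin."""
    x, y = point
    return (x, y, x * x + y * y)


def side_of_plane(point3d, height=1.0):
    """Classify by the horizontal plane z = height: +1 above, -1 below."""
    return 1 if point3d[2] > height else -1


inner_labels = [side_of_plane(lift(p)) for p in INNER]
outer_labels = [side_of_plane(lift(p)) for p in OUTER]
print(inner_labels, outer_labels)
```

In the plane, only a closed curve around the inner cluster would separate the classes. After the lift, the flat plane at height 1 does the job, which is a linear solution in three dimensions.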

Every separating plane is defined by several selected vectors, i.e., by those that are nearest to it. For this reason they are also called “support vectors”. You can picture them as stilts that support the separating plane from both sides (without actually touching it). Returning to the sample system, if a pair of values for height and weight from an unknown source is now fed in, the support vector machine compares the vector derived from these values with the support vectors that mark the male–female border, and in this way quickly determines the sex.

A support vector machine represents men and women whose height and weight have been entered as points in a 2D plane. The clouds of points representing men and women cannot be separated linearly using a straight line but only with a wavy line.

A remedy is provided by a mathematical function (kernel function) with which the points that were previously in the 2D plane are raised by varying amounts into 3D space. There it is possible to cleanly separate them using a 2D separating plane (blue) that, relative to the 3D space, represents a linear solution.

The decisive trick behind support vector machines is that while the separation of the points takes place cleanly in the high-dimensional space, the computer does not need to carry out the associated complicated and cumbersome vector calculations there. The reason is that the systems work only with the scalar products of these vectors, which by definition are plain numbers and which the kernel function delivers directly from the original points.
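That a scalar product in the higher-dimensional space can be obtained without ever going there can be checked numerically. The sketch below uses the degree-2 polynomial kernel as one standard example; the choice of kernel, the explicit map `phi`, and the two sample points are assumptions for illustration and are not taken from the article.

```python
import math


def phi(v):
    """Explicit map from 2D into a 3D feature space."""
    x1, x2 = v
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def dot(a, b):
    """Ordinary scalar product of two vectors of equal length."""
    return sum(p * q for p, q in zip(a, b))


def kernel(u, v):
    """Degree-2 polynomial kernel: the squared scalar product in 2D."""
    return dot(u, v) ** 2


u, v = (1.0, 2.0), (3.0, 0.5)
explicit = dot(phi(u), phi(v))  # scalar product computed in 3D
shortcut = kernel(u, v)         # same number without ever leaving 2D
print(explicit, shortcut)
```

Both values agree up to rounding. For kernels whose feature space has very many or infinitely many dimensions, the explicit route is impractical or impossible, while the kernel remains a single cheap calculation.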

“If our sample system continues to make too many mistakes, such as classifying too many females as males and vice versa, the programmer has to make adjustments to the kernel function”, Bauer explains. “The beautiful part of the kernel function is that it is a mathematically precisely defined quantity and thus a precise adjusting screw”. In comparison, a neural network is an opaque black box whose parameters, created in a purely statistical manner during the training, lie hidden somewhere in the network weights.

Bernhard Schölkopf is the most frequently cited German computer scientist

Support vector machines had their greatest successes during the 1990s. At Bell Labs, Schölkopf and Vladimir Vapnik together developed a system that could recognize handwritten digits on letters almost as well as a human, and better than all the competing systems, including neural networks. In 1997, a support vector machine succeeded in automatically evaluating 21,450 news reports from the Reuters News Agency and classifying them into 135 categories such as sports, business, and politics.

As a result of their mathematical approach, support vector machines gave computer science and especially its subdiscipline machine learning a significant boost. Bernhard Schölkopf is the most frequently cited German computer scientist and belongs, according to the American research journal Science, to the ten most influential computer scientists in the world.

“Decisive for the great advances made in machine learning are, above all, the immensely increased amounts of data, called big data in the US”, Schölkopf says and explains this by referring to the following example. A support vector machine could, for example, be given the task of detecting the biologically important classes in segments of DNA, our genetic material. “As long as we only have a limited amount of training data, say a few thousand, the precision is very low. We humans would presumably only look at a couple of thousand at most. This would not be sufficient for us to recognize patterns. But with large amounts of data, e.g., 10 to 15 million, the precision increases. A support vector machine can then detect structures that a human would never be in a position to find.”

“Crucial to the great advances in machine learning are above all the huge amounts of data called, in the US, Big Data.”

Bernhard Schölkopf

AI technology works but is clearly not yet perfect. “A neural network can for example recognize a cow on most images without any difficulty”, Schölkopf says. “But it has problems if the image of a cow is shown on an ocean beach. This is a result of the training data, which usually show cows on meadows. The system is led astray, as it were, by the fact that the cow is in an inappropriate environment, and the system does not recognize the cow since it only pays attention to correlations, ignoring causality”. According to Schölkopf, future AI systems “should also understand causality: Thinking is, according to Konrad Lorenz, nothing but acting in an imagined space. The representations that we learn should reflect an understanding of how the world reacts to our actions. This goes beyond the statistical methods that are the foundation of the present methods.”

Mistakes also occur, for instance, in the automatic processing of online credit applications. Time and again, AI systems reject loans although the applicant is demonstrably creditworthy. Experts believe that such mistakes will never be completely eliminated because different computer scientists often have different opinions as to which algorithm is best suited for which application. Furthermore, the sensors in autonomous vehicles can, for example, age or get dirty.

AI systems will soon revolutionize office life too

Following the robotization of factories, AI systems will next revolutionize office life. By now they can “understand” the contents of documents without any difficulty and file them automatically. In a similar manner, they filter spam emails. The systems can even process simple insurance claims. In quality control in manufacturing they discover the smallest defects in a flash, defects that humans could easily overlook. In medicine they detect tumors on x-rays or CT images as accurately as human experts. The editorial offices of several well-known US newspapers and agencies such as the Associated Press already let robot journalists prepare simple standard reports from the fields of business and sports.

Schölkopf’s team at the MPI Tübingen is currently conducting research on algorithms that can also recognize causal relationships in data. This new, promising field of research is called causal inference. One of its goals is to make AI systems more robust against interference. “If a 30 speed limit sign within the city limits has been altered to look like a 120 sign, then the AI system of a self-driving car must be able to conclude that the sign is to be ignored”, Schölkopf explains. Self-driving cars in the US have in fact already caused several fatal accidents.

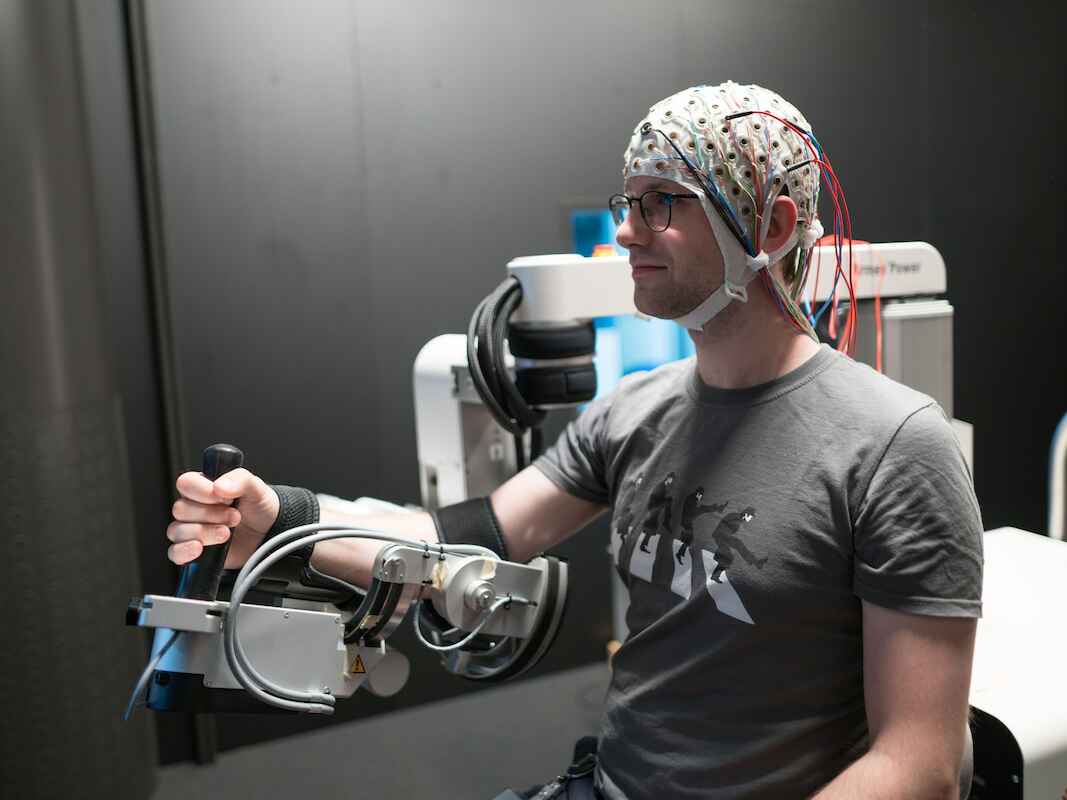

Simon Guist, a doctoral candidate at the MPI Tübingen, trains an artificial arm to return virtual balls.

New AI systems even train themselves

The newest trend in machine learning is for the systems no longer to be trained laboriously, sometimes with millions of data points. Instead, they are to recognize patterns, structures, and rules completely independently. Following supervised learning (with training) we now have unsupervised learning.

The Google firm DeepMind achieved a spectacular success in this subdiscipline in 2015. (DeepMind is a startup founded in London in 2010, which Google purchased in January 2014.) The DeepMind researchers had created a neural network that independently learned to play 49 classic computer games from the 1980s that ran on an Atari 2600 console. To do this, the team used the so-called deep learning procedure, which uses a neural network with a particularly large number of neurons and layers. As input, the network was given the color pixels of the respective video game and the score as displayed. As output, the network produced joystick movements. The algorithm was programmed so that joystick movements that raised the score were rewarded. At the beginning, the network moved the joystick at random, and often wrongly. Little by little, however, it learned to optimize the movements so that its score increased. After many thousands of games the network was good enough to play as well as human competitors. It had taught itself the rules.
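Learning from rewards alone can be sketched on a far smaller scale than an Atari game. The following example is a toy illustration, not DeepMind's system: it assumes an invented five-cell track with a goal at the right end, and it stores its estimates in a small table (tabular Q-learning) where DeepMind used a deep neural network. The names `step`, `learn`, and `q_table` are hypothetical.

```python
import random

GOAL = 4                       # rightmost cell of a five-cell track
ACTIONS = {"left": -1, "right": +1}
DISCOUNT = 0.9                 # later rewards count slightly less


def step(position, action):
    """Move one cell (walls at both ends); reward 1 only on reaching the goal."""
    new_position = min(max(position + ACTIONS[action], 0), GOAL)
    reward = 1.0 if new_position == GOAL else 0.0
    return new_position, reward


def learn(episodes=50, seed=0):
    rng = random.Random(seed)
    # One value estimate per (cell, joystick direction), all starting at zero.
    q = {(p, a): 0.0 for p in range(GOAL) for a in ACTIONS}
    for _ in range(episodes):
        position = 0
        while position != GOAL:
            action = rng.choice(list(ACTIONS))  # pure trial and error
            new_position, reward = step(position, action)
            future = 0.0 if new_position == GOAL else max(
                q[(new_position, a)] for a in ACTIONS)
            # Deterministic game, so the estimate can be overwritten outright.
            q[(position, action)] = reward + DISCOUNT * future
            position = new_position
    return q


q_table = learn()
policy = [max(ACTIONS, key=lambda a: q_table[(p, a)]) for p in range(GOAL)]
print(policy)
```

The agent is never told that moving right is the point of the game. It only sees that certain moves are eventually followed by a reward, and the table ends up preferring "right" in every cell.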

A Schölkopf team is working on a table tennis robot, which continuously improves its playing proficiency as a result of imitation and training. One day it should be able to hold its own against human players.

At the invitation of the prestigious British science journal Nature, Bernhard Schölkopf wrote about this in an article entitled “Learning to see and act”. What especially impressed him was that the DeepMind researchers had the network play against itself after the initial learning phase. “They saved the experience the system had already gained and used this in a game against itself to train further. This is similar to procedures that take place in the brain’s hippocampus during sleep”. The brain researchers May-Britt and Edvard Moser, who were awarded the Körber Prize in 2014, shortly before receiving the Nobel Prize, had indeed determined in experiments with rats that ran through a labyrinth during the daytime that the rodents replayed the daytime trial runs in their hippocampus while asleep at night. Such dreamed recapitulations result in a synaptic consolidation of what has been learned. In AI research, this process is designated “reinforcement learning”.

There are even several Schölkopf teams working in this new field of research on reinforcement learning. In the basement of the Tübingen institute there is a table tennis table with two robotic arms. Initially the arms cannot do more than hold the paddles. They have no idea how to play table tennis, but are supposed to learn this on their own by trial and error. “We will feed them with learning software that enables them to learn from their own mistakes and to draw the right conclusions”, Schölkopf says. “Then they can practice all night, and the next morning they will already play much better”. The team hopes that the table tennis robot will one day be so powerful that it can beat human players.

As an AI location, Germany has to prove itself against tough competition from the USA and China

Thanks to the rapid advances, AI has also gained significantly in economic relevance in the last few years. “Researchers here are very concerned that countries such as China and the USA invest more in AI and put much more emphasis on strategic thinking”, Schölkopf says. He is actively involved in strengthening Germany as a location for AI and helping it achieve a top position despite the stiff international competition. Schölkopf is a cofounder of the Cyber Valley in the Stuttgart–Tübingen region of Germany. This is a competence center supported by the state of Baden-Württemberg which has managed to attract large IT companies from the USA in addition to German technology corporations and which has already attained worldwide renown.

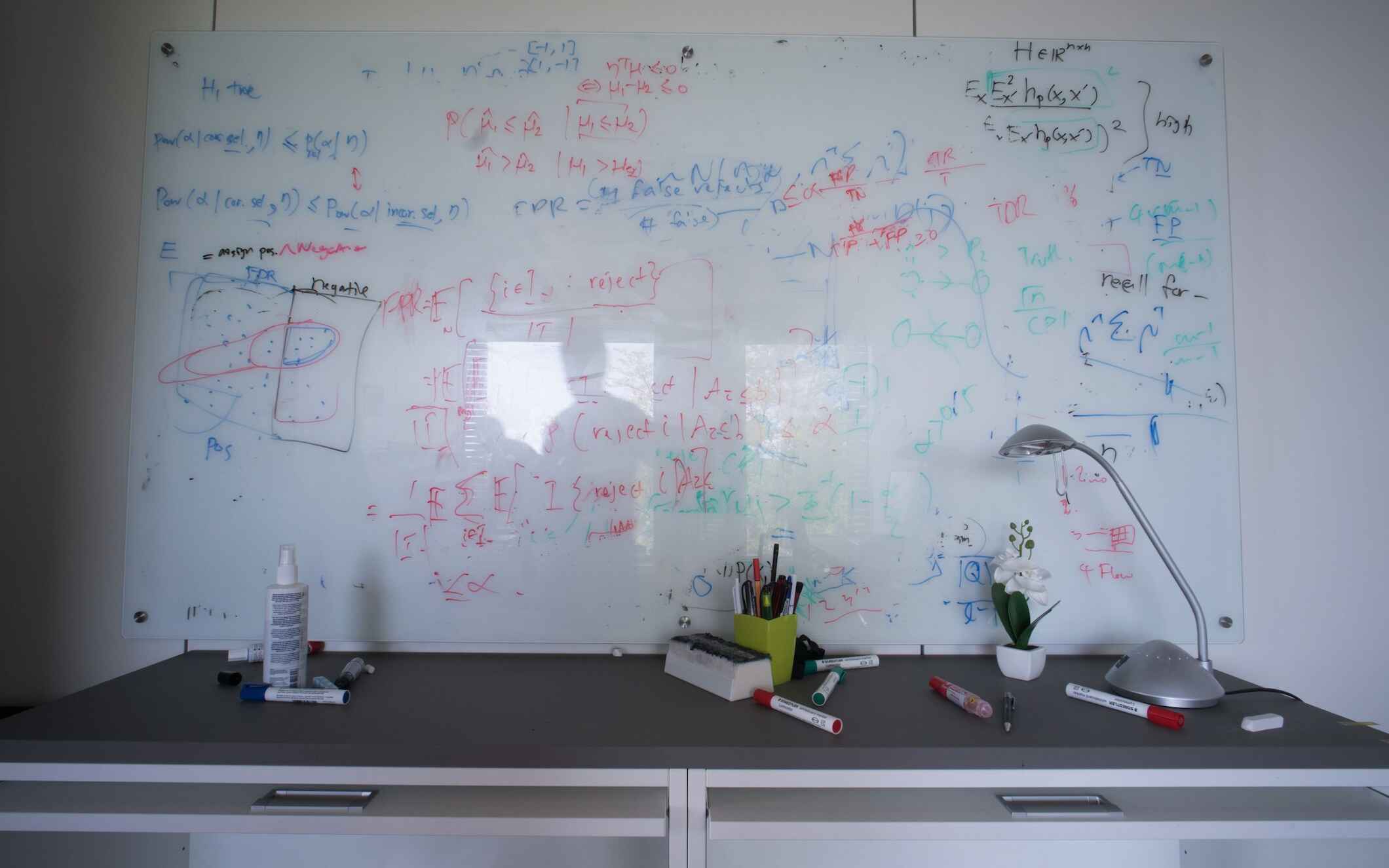

Creative working atmosphere: The doctoral candidates Chaochao Lu and Arash Mehrjou program complex algorithms in their office.

The “Corner Room” at the MPI in Tübingen serves as a place of peace and reflection, as long as no one is reaching for the electric guitar.

As measured by the number of scientific publications on the topic of AI, Germany has already fallen behind in international comparison. “There are German coauthors for only 3% to 4% of the articles presented at top conferences”, Schölkopf says. A good 10 years earlier it was still 10%. China in particular is catching up. In 2017, 48% of all the funding for AI startups worldwide was invested there. In a Fortune list of the 100 leading AI startups there is not a single one from Germany.

“Whoever wants to secure themselves a leading position in AI in the medium term should”, says Schölkopf, “on the one hand make wide use of the AI methods that are available today—by promoting startups, technology transfer, and applied research in Fraunhofer institutes and at the German Research Center for Artificial Intelligence. On the other hand, they should invest strategically in basic research since AI hotspots such as those in Silicon Valley or Cambridge develop at locations where there is excellent basic research. The learning techniques of tomorrow are being discovered today. Machine intelligence needs not only data but above all heads”. For this reason, the company with the best self-driving cars will “not be the one with the best patents or the best secrets, but that with the best engineers, the best machine-learning people”.

“Machine intelligence needs not only data but above all heads.”

Bernhard Schölkopf

Local AI hotspots in Europe must be strengthened

Until now Europe has lagged behind because large IT companies and elite universities, especially from the USA, have consistently attracted the most talented people. Google is currently financing over 250 research projects in the area of deep learning. Most strongly affected by this are our public research institutions. Schölkopf: “Many of our prospective Ph.D.s have offers from American companies or elite universities. That is where professors from Europe are working who have become superstars and successful entrepreneurs in the USA. And young scientists want to learn where the best are doing research”. It is therefore decisive to strengthen local hotspots in Europe, such as Germany’s new Cyber Valley, and to keep the stars of tomorrow in Europe or to get them to come back. Fortunately, the Max Planck Society invested in the future of AI at an early stage and founded the MPI for Intelligent Systems in 2011, which has since gained global recognition.

In the framework of the planned ELLIS program (European Laboratory for Learning and Intelligent Systems) Schölkopf wants “to improve the networking between leading European locations, start joint programs, and educate Ph.D. candidates. Young top scientists should not have to go to the USA to work at the highest level”. Schölkopf wants to employ some of the funds from the Körber Prize in the new field of causal inference and for workshops to promote the ELLIS project.

Schölkopf does, however, see the downsides posed by AI. Some scientists work on intelligent fighting machines that in a war could kill humans autonomously. Schölkopf emphatically rejects these military applications of AI and, together with many other AI and robotics researchers, organizes the protest against them.

Schölkopf does think, however, that the widespread anxiety of many people toward AI is exaggerated. “The systems only function in thematically very narrowly specialized areas. Even deep learning networks are quite simple structures compared to our brain”. His colleague Wolfgang Wahlster, founding director of the German Research Center for Artificial Intelligence, agrees with him: “AI is still light years away from ordinary human intelligence. It can acquire common sense only to an extremely limited degree”.

The humanoid robot Athena—a unique model—weighs 75 kilograms and is used to research dynamic running movements.

The prizewinner

Bernhard Schölkopf grew up in Filderstadt, a city near Stuttgart. His father was a master mason and later a building contractor, and his mother a housewife. Friends of his parents would call the rather reticent boy “the little professor”. It was already as a schoolboy that Schölkopf discovered his love of numbers. After graduating from school (Abitur) in 1987, he studied physics, mathematics, and philosophy in Tübingen and London. He was awarded a Ph.D. in computer science by the TU Berlin in 1997.

Formative for Schölkopf’s career was a stipend he received from the German Academic Scholarship Foundation, which enabled him to go to the Bell Labs in the USA. There, he helped his later doctoral supervisor Vladimir Vapnik develop what are referred to as support vector machines to the point that they were ready for use. After receiving his Ph.D. Schölkopf worked at Microsoft Research in Cambridge in Great Britain. That is where he met his later wife, a Spanish illustrator, with whom he has three children. She has published an illustrated book based on one of his ideas, namely about a boy who rides a comet and scatters shooting stars on our planet.

Following a period at the New York startup Biowulf, Schölkopf was appointed Director of the Max Planck Institute (MPI) for Biological Cybernetics in Tübingen in 2001. In 2011 he was one of the founding directors of the Tübingen MPI for Intelligent Systems. His strongly mathematically oriented studies on the topic of machine learning have earned Schölkopf world renown. He is the German researcher who is most frequently cited in scientific publications on this topic and has been counted among the ten most important computer scientists in the world. Schölkopf has already been awarded numerous prestigious prizes, such as the Leibniz Prize in 2018.

Furthermore, Schölkopf is a strong promoter of enhancing Germany’s position as a location for artificial intelligence (AI). He is a cofounder of the AI hotspot “Cyber Valley” in the Stuttgart–Tübingen region, where in the meantime research centers have also been established by large American IT companies. In the planned ELLIS program (short for the European Laboratory for Learning and Intelligent Systems), he wants to improve the networking between leading European locations. Schölkopf’s hobbies include music; he plays piano and sings in a choir. His favorite composer is Johann Sebastian Bach.

Awards Ceremony 2019

The photos of the Awards Ceremony in the Hamburg City Hall are free to use in the context of news coverage with the credits Körber-Stiftung/David Ausserhofer given below.
